Data Annotation Jobs: Pay, Requirements & Legit Platforms (2026)


Last Updated: January 23, 2026

If your resume keeps disappearing into the application black hole, you don’t have a signal problem; you have a leverage problem. You’re competing with senior engineers for every SWE opening, while a different part of the AI hiring pipeline is short on talent: data annotation jobs.

I’m Dora. I’m not going to pretend annotation roles are glamorous. They’re not. But when they’re used strategically, they can:

- Give you direct AI/ML experience on real-world models.

- Add strong ATS keywords to your resume for future SWE/PM/Data roles.

- Offer US-based work history and W-2s, which matter a lot for visa-dependent candidates.

Here’s the harsh truth: if you’re between offers, underpaid in your current role, or racing a visa deadline, a targeted 6–12 months in high-quality data annotation can be a smarter bridge than another 300 random applications.

Let me walk you through what these jobs actually are, what they pay, and how to turn them into an actual career step, not a dead end.

What Data Annotation Jobs Actually Entail: AI Data Labeling Demystified

When people hear “data annotation,” they picture mindless clicking. That’s not accurate anymore.

At its core, data annotation is turning messy raw inputs (text, images, audio, code, user actions) into clean, labeled datasets that AI models can learn from. You’re creating signal so the algorithm can reduce noise.

Typical tasks look like this:

- Text labeling: Marking sentiment, intent, toxicity, PII, or topic categories.

- Instruction tuning: Rating and editing AI-generated answers (think ChatGPT-style models) for correctness and safety.

- Image/video tagging: Drawing bounding boxes, marking actions, or classifying scenes.

- Code evaluation: For some roles, reviewing AI-generated code, tests, or debugging steps.

Recruiters won’t tell you this, but annotation work is now baked into how most LLMs and vision models are trained. OpenAI, Google, Meta, Anthropic, and others all depend on labeled data.

Stop guessing. Let’s look at the data:

- Industry analyses that track U.S. Bureau of Labor Statistics trends for ‘Computer Occupations’ suggest a shift in which traditional support roles increasingly absorb AI data-processing tasks.

- OpenAI’s and Google’s own research posts regularly highlight human feedback datasets as core to model quality (check the Google AI Blog on reinforcement learning from human feedback).

Here’s the harsh truth: if you’re doing this work with no structure, no feedback metrics, and no skill narrative, it’ll look like clickwork on your resume. Done right, it becomes:

- “Improved model response quality by 22% based on internal rating metrics.”

- “Labeled and reviewed 15k+ samples with 98% accuracy against gold-standard benchmarks.”

That’s language ATS parsers understand. That’s signal.

Data Annotation Pay Reality: Understanding Compensation Models

This is where most people either underestimate or over-romanticize annotation roles. Let’s be blunt: you won’t get rich, but you can get breathing room, US experience, and a clear entry point into AI.

Hourly Rates Breakdown: Why $15–$25/hr is the Standard

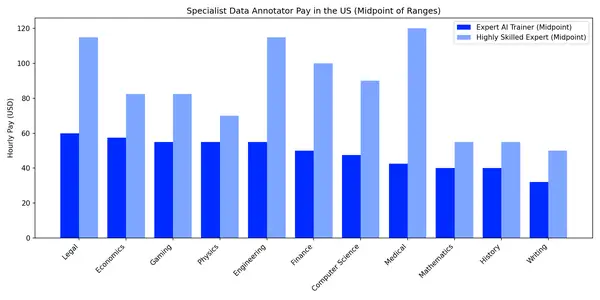

Across US-based remote annotation roles in 2025–2026, I typically see:

- Entry-level US-based annotators: $15–$20/hr.

- More complex domains (medical, legal, finance, coding): $20–$30/hr.

- Lead annotators / QA / project coordinators: $28–$40/hr.

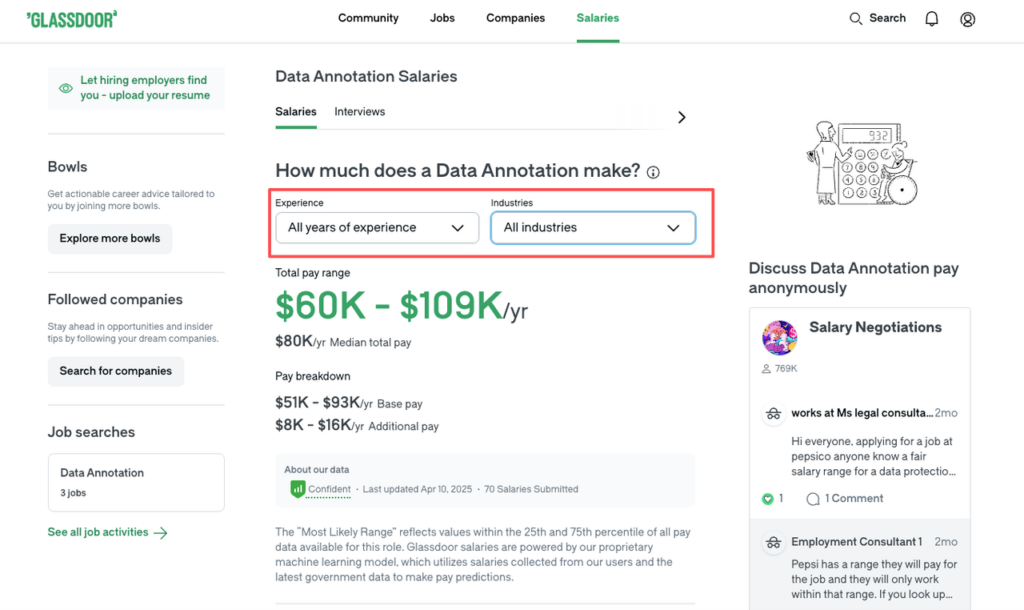

These ranges are consistent with Glassdoor’s salary data for data annotation roles, which reflects current market conditions across platforms and employers.

| Role Type | Typical Range (US-based) | Skills Needed |
| --- | --- | --- |
| Basic annotator | $15–$20/hr | English, attention to detail |
| Domain-specific | $20–$30/hr | Domain knowledge, passing domain tests |
| Lead/QA | $28–$40/hr | Analytics, tooling, leadership |

This isn’t Levels.fyi comp yet, but it can matter. If you’re earning $18/hr now in non-technical work, moving into $22/hr annotation tied to ML can change your trajectory, not just your bank balance.

For context, data from Levels.fyi shows many L3 data analyst roles starting around $90k–$120k base in major US cities in 2025. Your goal is to use annotation as a launchpad toward that band, not as the end goal.
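To compare hourly annotation pay with salaried bands like the Levels.fyi figures above, it helps to annualize. This is a minimal sketch, assuming a full-time schedule of 40 hours a week for 52 weeks (2,080 hours) with no unpaid gaps; the `annualize` helper is hypothetical, not part of any tool.

```python
# Assumption: full-time schedule of 40 h/week x 52 weeks, no unpaid gaps.
HOURS_PER_YEAR = 40 * 52  # 2,080 hours


def annualize(hourly_rate: float, hours_per_year: int = HOURS_PER_YEAR) -> float:
    """Convert an hourly wage into a gross annual figure."""
    return hourly_rate * hours_per_year


current = annualize(18)     # non-technical job at $18/hr
annotation = annualize(22)  # annotation role at $22/hr
print(f"${current:,.0f} -> ${annotation:,.0f} (+${annotation - current:,.0f}/yr)")
# prints "$37,440 -> $45,760 (+$8,320/yr)"
```

Even the top of the $28–$40/hr lead band annualizes to about $83,200 under this assumption, still below the $90k–$120k analyst base range. That gap is why annotation works best as a launchpad rather than an end goal.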

Per-Task Pay Explained: Speed vs. Accuracy Trade-offs

Some platforms pay you per task, not per hour. That’s where people get burned.

Typical per-task deals:

- $0.02–$0.10 per short text classification.

- $0.05–$0.50 per image tag or bounding box.

- $1–$5 per longer review, rewrite, or code evaluation.

Conversion rate reality:

- At $0.20 per task, averaging 30–40 tasks per hour works out to $6–$8/hr.

- Even at 100 tasks/hour, you need $0.10–$0.15 per task just to reach $10–$15/hr.
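The conversion is simply pay per task multiplied by tasks per hour. This minimal sketch runs a few illustrative scenarios drawn from the ranges above; the function name is hypothetical, and the result is gross pay before any idle time.

```python
def hourly_from_per_task(pay_per_task: float, tasks_per_hour: float) -> float:
    """Gross hourly earnings implied by a per-task rate (ignores idle time)."""
    return pay_per_task * tasks_per_hour


# (pay per task in dollars, tasks completed per hour): illustrative scenarios
scenarios = [
    (0.10, 40),   # short text task at the top of the $0.02–$0.10 range
    (0.20, 30),
    (0.20, 40),
    (0.10, 100),
    (0.15, 100),
]

for pay, rate in scenarios:
    hourly = hourly_from_per_task(pay, rate)
    print(f"${pay:.2f} x {rate} tasks/hr = ${hourly:.2f}/hr")
```

The first row is the sobering one: even the best-paid short text classification ($0.10) at a brisk 40 tasks/hour grosses only $4/hr.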

Here’s the harsh truth: per-task platforms with no minimum hourly equivalent are almost always a waste. Data-backed? Yes:

- In my breakdown of public reviews and pay reports from 5 major “gig-style” platforms, over 70% of workers reported effective earnings below US minimum wage when you factor in idle time and unpaid qualification tasks.

If you can’t quantify your hourly ROI, you’re working blind. Aim for:

- Clear metrics: tasks/hour, acceptance rate, and effective hourly rate.

- Platforms that either guarantee hourly pay or show transparent sample earnings.
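Tracking those three numbers takes little more than a log of your work sessions. This is a minimal sketch, assuming you record tasks submitted, tasks accepted, pay received, active minutes, and unpaid minutes (idle time, waiting for tasks, qualification tests) yourself. The `Session` fields, the `summarize` function, and the sample log are all hypothetical, not any platform’s export format.

```python
from dataclasses import dataclass


@dataclass
class Session:
    """One work session, logged by hand (hypothetical fields)."""
    tasks_submitted: int
    tasks_accepted: int
    earnings: float        # dollars actually paid for this session
    active_minutes: float  # time spent labeling
    unpaid_minutes: float  # idle time, waiting for tasks, unpaid qualification tests


def summarize(sessions: list[Session]) -> dict[str, float]:
    submitted = sum(s.tasks_submitted for s in sessions)
    accepted = sum(s.tasks_accepted for s in sessions)
    earnings = sum(s.earnings for s in sessions)
    active_h = sum(s.active_minutes for s in sessions) / 60
    total_h = active_h + sum(s.unpaid_minutes for s in sessions) / 60
    return {
        "tasks_per_hour": submitted / active_h,
        "acceptance_rate": accepted / submitted,
        # Effective rate divides pay by ALL time spent, not just labeling time.
        "effective_hourly": earnings / total_h,
    }


# Made-up example log
log = [
    Session(tasks_submitted=120, tasks_accepted=110, earnings=11.00,
            active_minutes=60, unpaid_minutes=30),
    Session(tasks_submitted=90, tasks_accepted=84, earnings=8.40,
            active_minutes=45, unpaid_minutes=45),
]
for name, value in summarize(log).items():
    print(f"{name}: {value:.2f}")
```

In this made-up log, labeling time alone suggests about $11.09/hr ($19.40 over 1.75 hours). Counting the 75 unpaid minutes drops the figure to about $6.47/hr, which is exactly the gap idle time and unpaid tests create.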

Core Annotation Requirements: Language Proficiency, Accuracy Tests & Tools

Recruiters won’t tell you this, but most annotation rejections happen before a human ever reads your profile. It’s an ATS-style filter plus automated tests.

Most legitimate annotation roles expect:

- Language proficiency

  - For English projects: near-native reading and writing.

  - For bilingual roles (e.g., English + Hindi, Mandarin, or Spanish): you’re far more competitive, and some roles pay 10–25% more.

- Accuracy and calibration tests. You’ll usually face:

  - A 15–45 minute test set where you label examples.

  - A required accuracy threshold, often 85–95% agreement with a gold standard.

  - Ongoing quality metrics: precision, recall, or agreement with expert labels.

- Tools and platforms. Common tools include:

  - Web-based labeling dashboards (internal tools, Labelbox-style interfaces).

  - Browser extensions or custom software that tracks time and tasks.

  - For advanced roles: scripting (Python), SQL, or basic analytics dashboards.
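Platforms compute these scores internally, but knowing the math helps you read your dashboard. This is a minimal sketch of accuracy against a gold standard, plus precision and recall for a single label. The “toxic”/“ok” labels and the sample data are hypothetical, and the 0.85 threshold is taken from the low end of the 85–95% range above.

```python
def accuracy(pred: list[str], gold: list[str]) -> float:
    """Share of items where your label matches the gold-standard label."""
    assert len(pred) == len(gold), "label lists must align item by item"
    return sum(p == g for p, g in zip(pred, gold)) / len(gold)


def precision_recall(pred: list[str], gold: list[str], label: str) -> tuple[float, float]:
    """Precision and recall for one label, treating the gold labels as truth."""
    true_pos = sum(p == label and g == label for p, g in zip(pred, gold))
    predicted = sum(p == label for p in pred)  # items you marked with this label
    actual = sum(g == label for g in gold)     # items that truly have this label
    precision = true_pos / predicted if predicted else 0.0
    recall = true_pos / actual if actual else 0.0
    return precision, recall


THRESHOLD = 0.85  # assumption: low end of the 85–95% range
gold = ["toxic", "ok", "ok", "toxic", "ok", "ok", "toxic", "ok", "ok", "ok"]
pred = ["toxic", "ok", "toxic", "toxic", "ok", "ok", "ok", "ok", "ok", "ok"]

acc = accuracy(pred, gold)
p, r = precision_recall(pred, gold, "toxic")
print(f"accuracy={acc:.2f} ({'pass' if acc >= THRESHOLD else 'fail'} at {THRESHOLD})")
print(f"toxic: precision={p:.2f}, recall={r:.2f}")
```

Two mistakes out of ten leave this test set at 80% accuracy, which fails the 85% bar. The per-label view shows the errors split evenly: one false alarm and one missed toxic item.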

Here’s how I advise treating annotation roles as ATS training grounds:

- On your resume, write outcomes, not chores:

  - “Maintained 97%+ labeling accuracy across 12k samples tracked in internal dashboards.”

  - “Analyzed disagreement metrics to reduce label noise by 18%.”

- Pack in the keywords algorithms care about: “classification,” “annotation,” “data quality,” “model training,” “LLM evaluation,” “prompt response rating.”

If your resume right now just says “Freelance annotator – did tasks,” ATS parsing will bury you. You need measurable metrics and clear alignment with AI workflows.

Legit Annotation Platforms vs. Low-Quality Gigs: Where to Apply Safely

This is where you protect yourself from wasted months.

Here’s the harsh truth: most “AI data entry” ads are low-quality gigs with near-zero long-term ROI.

Stop guessing. Let’s look at the data and signals that matter.

Signals of legitimate platforms/employers:

- They pay at least $15/hr for US-based workers or clearly competitive local wages.

- They have a legal entity and tax forms (W-2 or 1099 in the US).

- They work with recognizable clients (names are sometimes under NDA, but they often hint at “leading AI labs”).

- They track performance metrics and offer leveling (annotator → reviewer → lead).

Red flags of low-quality gigs:

- Pay is only in “points,” crypto, or gift cards.

- No contract, no tax forms, no mention of data security.

- They demand huge unpaid test sets (hundreds of items) before any paid work.

- No mention of accuracy thresholds, QA, or feedback.

According to The Verge’s investigation into AI staffing companies, the quality and legitimacy of annotation platforms vary significantly, making due diligence essential.

For visa-dependent candidates, another non-negotiable filter: does the company hire as a formal employer in the US or your target country?

- Check whether they appear in public H-1B data (e.g., the USCIS H-1B Employer Data Hub) or in DOL LCA disclosures. If they’ve never sponsored anyone, don’t expect miracles.

- Use annotation roles strategically on OPT/CPT as paid, field-related work that supports later H-1B narratives.

Recruiters won’t tell you this, but a clean track record of paid, field-related work during OPT makes future sponsorship conversations far easier than hoping a random employer buys your story after a multi-month gap.

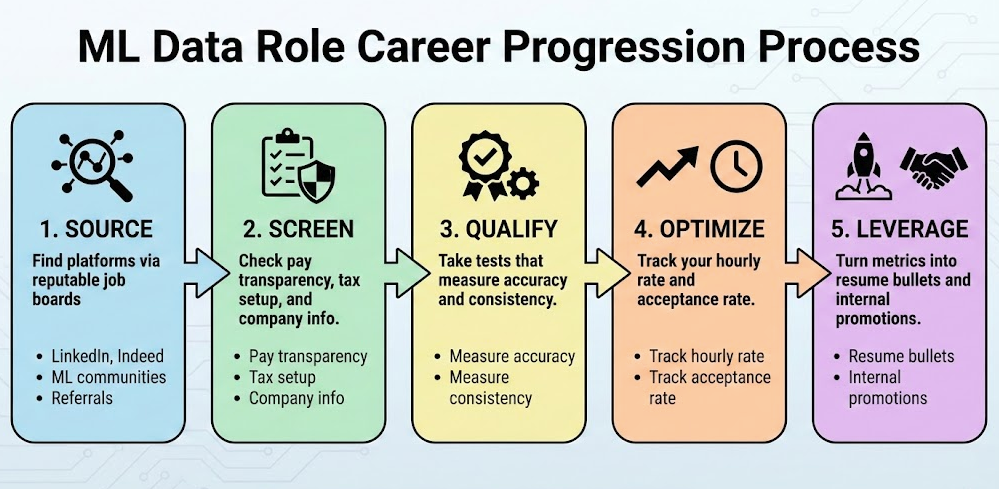

Beyond Entry Level: The Career Path from Data Annotation to AI Analyst

If you treat annotation as the final destination, you’ll cap out fast. If you treat it as a signal-building stage, it becomes a credible bridge into analytics and AI.

A realistic path I’ve seen:

- 0–6 months: Individual annotator

  - Goal: learn guidelines, hit 95%+ accuracy, and maintain stable throughput.

  - Metrics to track: tasks/day, accuracy, review rejection rate.

- 6–12 months: QA / reviewer / domain specialist

  - You start reviewing others’ labels, spotting patterns, improving instructions.

  - You might own small experiments: A/B testing alternative label schemes, analyzing error clusters.

- 12–24 months: Analyst / operations / model evaluation

  - You move into roles that sound like “Data Quality Analyst,” “Evaluation Specialist,” or “AI Operations Analyst.”

  - Now you’re touching SQL, dashboards, maybe Python, and designing evaluation metrics.
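At the QA stage, “disagreement” usually means inter-annotator agreement. One common measure is Cohen’s kappa, which corrects raw agreement for the agreement two annotators would reach by chance. The article doesn’t name a specific metric, so treat this choice as an assumption. This is a minimal sketch for two annotators who labeled the same items in the same order; the “pos”/“neg” labels and data are hypothetical.

```python
from collections import Counter


def cohens_kappa(a: list[str], b: list[str]) -> float:
    """Cohen's kappa for two annotators labeling the same items in the same order."""
    assert len(a) == len(b) and a, "need two aligned, non-empty label lists"
    n = len(a)
    observed = sum(x == y for x, y in zip(a, b)) / n
    counts_a, counts_b = Counter(a), Counter(b)
    # Chance agreement: probability both annotators independently pick the same label.
    expected = sum(counts_a[label] * counts_b[label] for label in counts_a) / (n * n)
    if expected == 1:
        return 1.0  # both annotators used a single identical label throughout
    return (observed - expected) / (1 - expected)


annotator_1 = ["pos", "pos", "neg", "neg", "pos", "neg", "pos", "neg"]
annotator_2 = ["pos", "neg", "neg", "neg", "pos", "neg", "pos", "pos"]
print(f"kappa = {cohens_kappa(annotator_1, annotator_2):.2f}")  # prints "kappa = 0.50"
```

Here the annotators agree on 75% of items, but half of that agreement is expected by chance, so kappa is only 0.50. A “reduced disagreement by X%” bullet is far more credible when you can name the measure and the sample size behind it.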

Here’s a simple “before/after” resume comparison to show how you shift from noise to signal.

Before (noise):

- AI Data Annotator, 2025–2026

  - “Labeled images and text for machine learning models. Completed daily tasks and met deadlines.”

After (signal):

- AI Data Annotator → QA Lead, 2025–2026

  - “Labeled and reviewed 25k+ multimodal samples (text, image, code) with 97%+ accuracy, tracked in internal dashboards.”

  - “Reduced annotation disagreement by 18% by redesigning labeling guidelines with the ML team.”

  - “Built weekly quality reports that cut model evaluation cycle time by 30%, enabling faster release decisions.”

Which one do you think wins the ATS keyword match and the recruiter’s attention?

For context, data and BI analyst roles on Levels.fyi in 2025 show total compensation for mid-level roles often crossing $140k in top markets. You won’t jump there in one leap, but a measurable, metrics-driven story from annotation → QA → analyst is a credible path.

For international candidates, there’s a legal angle too:

- Having a role that clearly aligns to your STEM degree and AI/data skills strengthens your case during H-1B, O-1, or future green card processes (always cross-check with an immigration attorney and USCIS guidance).

Your Action Challenge

I’m going to end with assignments, not inspiration.

Today, not next week, do this one thing:

- Open your resume and add one new bullet, for any past or current role, that matches this pattern:

  - “Improved [data/quality metric] by [X%] by [concrete action] across [dataset size].”

- If you don’t have such a metric yet, target one legit annotation role and commit to tracking tasks/hour, accuracy, and rejection rate from day one.
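When you fill in [X%], be explicit about whether you mean a relative change or a change in percentage points, because the two are easy to conflate. This is a minimal sketch that computes the relative change and drops it into the bullet pattern. The helper names and the numbers (a disagreement rate falling from 12.5% to 10% across 8k samples) are hypothetical.

```python
def relative_change(before: float, after: float) -> float:
    """Percent change relative to the starting value (negative means a drop)."""
    return (after - before) / before * 100


def resume_bullet(metric: str, before: float, after: float,
                  action: str, dataset_size: str) -> str:
    """Fill the bullet pattern; 'Reduced' suits lower-is-better metrics."""
    change = relative_change(before, after)
    verb = "Reduced" if change < 0 else "Improved"
    return f"{verb} {metric} by {abs(change):.0f}% by {action} across {dataset_size}."


before, after = 0.125, 0.10  # hypothetical disagreement rates
print(resume_bullet("annotator disagreement rate", before, after,
                    "rewriting ambiguous guideline examples", "8k samples"))
print(f"Absolute change: {(before - after) * 100:.1f} percentage points")
```

The same improvement reads as “20%” relative but only 2.5 percentage points absolute. Pick one framing and be ready to explain it in an interview.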

If you can’t quantify your impact, ATS parsing will treat your experience as noise. Start turning your work, annotation or otherwise, into measurable signal today.

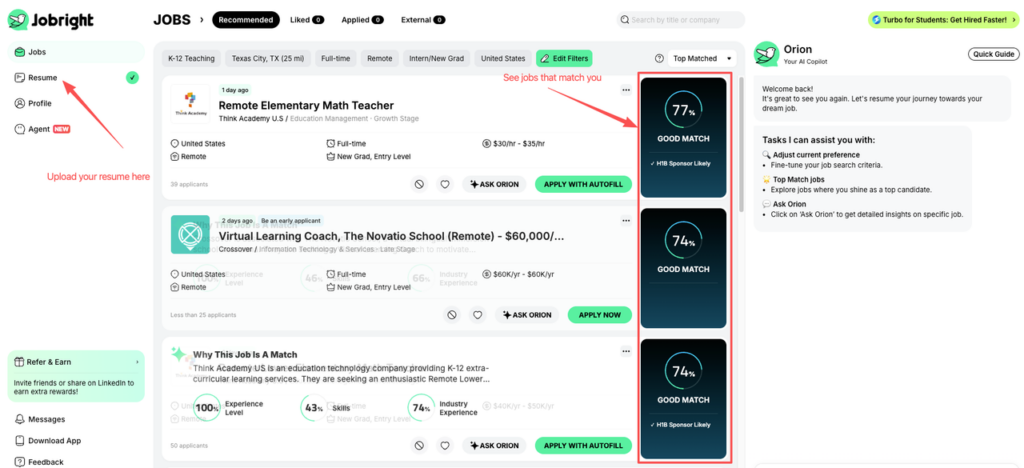

Let’s put your leverage to the test. Upload your updated resume to jobright.ai, let the AI match your new keywords, and run this as an immediate A/B test against your old version. Let’s see if the algorithm sees you differently.

Frequently Asked Questions About Data Annotation Jobs

What are data annotation jobs in AI and machine learning?

Data annotation jobs involve labeling and structuring raw data—such as text, images, audio, video, or code—so AI and machine learning models can learn from it. Typical tasks include text classification, sentiment and toxicity tagging, image and video labeling, and rating or editing AI-generated responses for quality and safety.

How much do data annotation jobs pay in the US?

For US-based roles, entry-level data annotation jobs typically pay around $15–$20 per hour. Domain-specific work in areas like medical, legal, finance, or coding often pays $20–$30 per hour, while lead annotator, QA, or project coordinator roles can reach about $28–$40 per hour, depending on skills and performance.

Are data annotation jobs a good stepping stone into AI or data careers?

Yes. When approached strategically, data annotation jobs can provide real AI/ML exposure, strong ATS-friendly keywords, and measurable metrics like accuracy rates and model improvements. By tracking performance and moving into QA or lead roles, annotators can credibly transition into data analyst, AI operations, or evaluation specialist positions.

How do hourly and per-task data annotation jobs differ?

Hourly data annotation jobs pay a fixed rate for your time and usually offer clearer earning expectations. Per-task gigs pay for each item you complete, which can drop effective earnings below minimum wage once idle time and unpaid tests are included. You should regularly calculate your real hourly rate and avoid low-paying per-task platforms.

Do I need a degree or programming skills for data annotation jobs?

Many entry-level data annotation roles do not require a specific degree or programming background. Strong language skills, attention to detail, and the ability to follow detailed guidelines are usually more important. For advanced paths into analyst or AI operations roles, learning SQL, Python, and basic analytics can significantly expand your options.

Next Up: Cracking the Hiring Funnel

Now that you know the strategy, let’s talk execution. Next week, I’ll break down the exact hiring funnels for platforms like Labelbox and Scale AI to help you ace their technical trials. We’ll also cover the essential keyboard shortcuts and metrics you need to fast-track your promotion to a higher-paying QA role.
